The Lie We Tell Ourselves About Customer Research

We don’t buy because of what we say; we buy because of what our subconscious decides.

To build synthetic users that truly act like people, we must stop chasing perfect logic and start engineering the "gut" instincts and emotional biases that drive real human decisions.

At Synthetic Users, our job is simple to say and hard to do: replicate human behaviour well enough that teams can run user research faster, cheaper, and earlier.

We do that today with Large Language Models (LLMs), retrieval systems, and careful prompting. This gets you surprisingly far. But there’s a deeper scientific reason why we believe the field can go further, and why the next leap isn’t “more reasoning” but better guts.

This post ties together three threads:

To understand why users behave the way they do, we look to neuroscientist Antonio Damásio (a personal hero of ours, and not just because he is Portuguese). He makes a critical distinction that most people miss:

The "Somatic Marker" (The Gut Check)

Damásio’s Somatic Marker Hypothesis argues that our bodies "tag" situations with value. When you face a choice, these markers fire as fast "gut signals" that bias your decision before you start logically analyzing the pros and cons.

If you damage the part of the brain that handles these markers (the ventromedial prefrontal cortex, in Damásio’s patients), you don’t lose IQ. You lose much of the ability to make decisions. You can list the pros and cons of a washing machine for three hours, but you can't pick one.

Why this matters for UX Research and Synthetic Users:

Most products don't fail because users can't logically explain features. They fail because users:

These are Somatic Marker behaviours. They aren't rational calculus; they are emotional biases. If we want Synthetic Users to behave like your real customers, we can't ignore this layer.

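Daniel Kahneman popularized a framework, later applied to LLMs by Andrej Karpathy, that is essential here: System 1 is fast, automatic, and intuitive, while System 2 is slow, deliberate, and effortful.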

The Industry Sprint to System 2

Right now, much of the AI industry is focused on making models better at deliberate reasoning (System 2). Labs are building methods like "Chain-of-Thought" (prompting the model to reason step by step) to solve math problems and write code.

The Synthetic User Problem

This is great for a coding assistant, but it can be fatal for a synthetic user.

If you simply scale up System 2 reasoning, you get a hyper-rational agent.

Imagine a synthetic user evaluating a sketchy Fintech app:

A "System 2" AI might say:

"The interest rate is 2% higher than the market average, and the onboarding flow is efficient. I will sign up."

A Real Human (System 1) might say:

"The logo looks cheap and they asked for my SSN too fast. I don't trust it. I'm out."

The real human reaction happens in under two seconds. It’s a gut check. If synthetic users are too logical, they will accept products that real humans would reject. We don't just need smart models; we need models that can simulate human irrationality.

The goal isn't to build a biological brain, but to build a system that replicates human preference patterns. To do that, the AI architecture needs "guts."

Here are the practical levers that could be used to achieve this:

Humans don't always deliberate. We often choose by instinct. To mimic this, models can be trained on data that reflects rapid categorization and "first answer that comes to mind" scenarios. This nudges the model away from writing a dissertation and toward giving a reaction.

The human brain is an energy-miser. We don't solve a logic puzzle every time we buy toothpaste.

This can be mimicked in AI by limiting the "compute" budget for certain decisions. Forcing the model to answer quickly, without "thinking" or revising too deeply, yields outputs that lean on heuristics (mental shortcuts), much like a tired user shopping on their phone at 8 PM.
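As a rough illustration of the budget idea, here is a minimal sketch that caps how much a reaction may say. The names `GutBudget`, `ask_gut`, and `fake_model` are hypothetical, and `generate` stands in for whatever model call you actually use; a real setup would map the budget onto the token or reasoning limits your provider exposes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GutBudget:
    """Hypothetical cap on how much a 'gut' reaction is allowed to say."""
    max_words: int = 25


def ask_gut(generate: Callable[[str], str], stimulus: str, budget: GutBudget) -> str:
    """Ask for a first impression and enforce the word budget on the reply."""
    prompt = (
        f"React in at most {budget.max_words} words. "
        "Give your first impression only; do not weigh pros and cons.\n"
        f"Stimulus: {stimulus}"
    )
    reply = generate(prompt)
    # Truncate in case the model ignores the instruction and keeps writing.
    return " ".join(reply.split()[: budget.max_words])


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption: returns plain text)."""
    return "The logo looks cheap and they asked for my SSN too fast. I don't trust it. " * 3


reaction = ask_gut(fake_model, "Fintech signup screen", GutBudget(max_words=12))
print(reaction)
```

The truncation step is deliberately crude. The point is only that the budget is enforced outside the model, so a verbose reply cannot turn a reaction into an essay.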

Humans don't just think in words; we think in states (anxious, bored, excited).

A "guts-first" model would ideally possess a dynamic internal state based on established psychological dimensions (such as valence and arousal, the two axes of the Circumplex Model of Affect).

Example Scenario:

By maintaining this "mood state" across a session, a synthetic user would behave consistently. They wouldn't just analyze a product; they would react to it based on their simulated emotional state.
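A mood state of this kind could be sketched as two numbers that persist across a session. This is a minimal sketch, assuming a valence and arousal pair in the range -1 to 1; the class `MoodState`, the `carry_over` factor, the event deltas, and the four mood labels are all illustrative choices, not a validated psychological model.

```python
from dataclasses import dataclass


def _clamp(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


@dataclass
class MoodState:
    valence: float = 0.0  # -1 unpleasant .. +1 pleasant
    arousal: float = 0.0  # -1 calm .. +1 activated

    def react(self, d_valence: float, d_arousal: float, carry_over: float = 0.8) -> None:
        """Blend a new event into the mood, keeping part of the previous state."""
        self.valence = _clamp(carry_over * self.valence + d_valence)
        self.arousal = _clamp(carry_over * self.arousal + d_arousal)

    def label(self) -> str:
        """Coarse quadrant label (illustrative names only)."""
        if self.valence >= 0:
            return "excited" if self.arousal >= 0 else "relaxed"
        return "anxious" if self.arousal >= 0 else "bored"


mood = MoodState()
mood.react(-0.4, 0.5)  # hypothetical event: SSN requested on the first screen
mood.react(-0.2, 0.3)  # hypothetical event: an unexpected fee appears
print(mood.label(), round(mood.valence, 2), round(mood.arousal, 2))
```

Because each event is blended with what came before, an early bad moment keeps colouring later reactions, which is the consistency the paragraph above describes.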

Gut instincts are shaped by history. To be believable, a synthetic user needs a persistent memory of "past experiences" to form biases. If a synthetic user "had a bad experience" with a subscription model in its backstory, it should instinctively hesitate when it sees a "Subscribe" button today.
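The subscription example can be sketched as a small memory of tagged experiences that returns a gut signal before any reasoning happens. This is only one possible reading: `GutMemory`, the tag names, the valence scores, and the -0.3 hesitation threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Experience:
    tags: FrozenSet[str]
    valence: float  # -1 bad .. +1 good


@dataclass
class GutMemory:
    experiences: List[Experience] = field(default_factory=list)

    def remember(self, tags: Iterable[str], valence: float) -> None:
        self.experiences.append(Experience(frozenset(tags), valence))

    def gut_signal(self, tags: Iterable[str]) -> float:
        """Average valence of past experiences sharing any tag; 0.0 if none match."""
        wanted = set(tags)
        hits = [e.valence for e in self.experiences if e.tags & wanted]
        return sum(hits) / len(hits) if hits else 0.0


memory = GutMemory()
memory.remember({"subscription", "gym"}, -0.8)        # backstory: hard-to-cancel gym plan
memory.remember({"subscription", "streaming"}, -0.2)  # backstory: forgotten renewal
memory.remember({"one-off purchase"}, 0.6)

signal = memory.gut_signal({"subscription", "button"})
hesitates = signal < -0.3  # hypothetical threshold for "instinctive hesitation"
print(signal, hesitates)
```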

If we take Damásio seriously, we can't just slap a persona on a chatbot. A truly effective synthetic user stack needs:

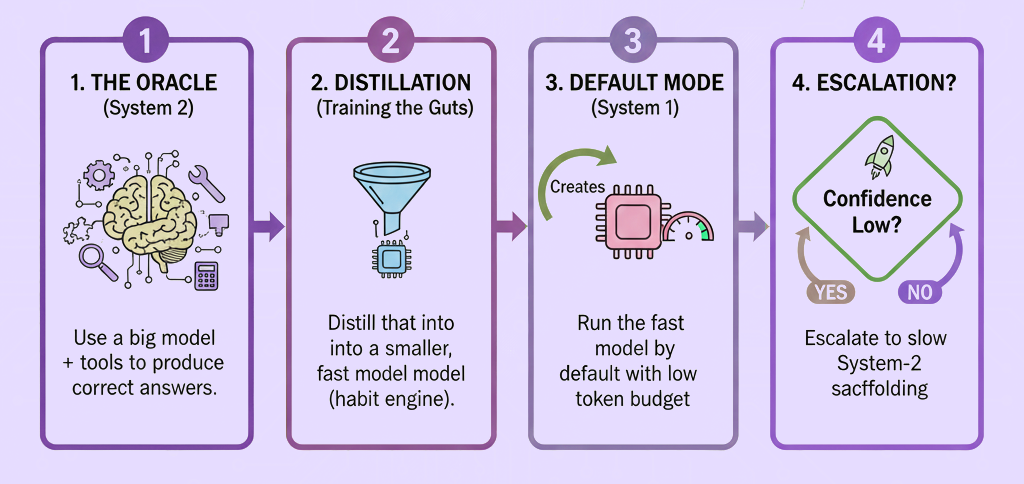

Here’s a practical end-game:

This is a first pass at mirroring human cognition: Gut first, reasoning second.
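"Gut first, reasoning second" can be read as a two-stage pipeline: a fast signal runs first, can veto on its own, and otherwise biases the slower step. The sketch below is a toy under that assumption, not a description of the Synthetic Users implementation; `gut_then_reason`, the toy scorers, and the veto threshold are hypothetical.

```python
from typing import Callable, Tuple


def gut_then_reason(
    stimulus: str,
    gut: Callable[[str], float],
    reason: Callable[[str, float], str],
    veto_below: float = -0.6,
) -> Tuple[str, str]:
    """Return (verdict, stage that produced it)."""
    signal = gut(stimulus)  # stage 1: fast, cheap, always runs
    if signal < veto_below:
        return "reject", "gut"  # strong negative gut signal ends the decision
    verdict = reason(stimulus, signal)  # stage 2: slower, sees the gut signal
    return verdict, "reasoning"


def toy_gut(stimulus: str) -> float:
    """Stand-in scorer: distrust anything that asks for an SSN."""
    return -0.8 if "SSN" in stimulus else 0.1


def toy_reason(stimulus: str, signal: float) -> str:
    """Stand-in for deliberate analysis, biased by the gut signal."""
    return "accept" if signal >= 0 else "reject"


print(gut_then_reason("asks for SSN on first screen", toy_gut, toy_reason))
print(gut_then_reason("clean pricing page", toy_gut, toy_reason))
```

The ordering matters more than the details: the reasoning step never gets a chance to talk the agent out of a strong negative reaction, which mirrors the Fintech example earlier in the post.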

On Neuroscience & Emotion

On AI & System 1/2